The embarrassing war of technology companies: the "good intentions" of algorithms almost ruined a father's life

Title image source | Lars Plougmann CC-BY-SA

Mark, who lives in San Francisco, never imagined that sending a photo to a doctor while rushing to get his son treated would nearly ruin his reputation.

It happened early last year. The pandemic was still at its peak, and some non-emergency medical facilities had suspended services, including the pediatric clinic Mark used. Mark noticed that his son's genitals were swollen, urgently sought professional help, and arranged a video call with a doctor.

Before the video call, a nurse asked Mark to send a photo for the doctor to review. Mark did.

What he did not expect was that this photo would bring disaster on him.

Image unrelated to the text. Image source: Bicanski / CC0 license

/ The father who loves his son, the "pedophile" in the eyes of the algorithm /

Two days after the photo was sent, Mark suddenly received a notice from Google.

His Google account had been completely disabled for allegedly storing and distributing harmful content, a serious violation of the user agreement and company policies that might also be illegal.

Because Mark was a devoted user of Google's whole suite of services, the consequences of the decision were severe:

He lost not only his Gmail, contacts, and calendar but also his phone number, which was frozen because he was a subscriber to Google Fi, Google's virtual carrier.

And the nightmare was far from over.

Google not only banned his account but also reported the incident to the watchdog organization that specializes in child sexual abuse content, which later contacted the San Francisco Police Department. At the end of last year, the San Francisco police obtained all of Google's information and records on Mark and formally opened an investigation into him.

For a year, Mark faced serious allegations of "pedophilia", struggled to carry on with work and daily life, and came close to a breakdown.

The New York Times article that reported the case said it was Google's little-known system for cracking down on child sexual abuse content that put Mark in this situation.

Under the U.S. government's definition, child sexual abuse material (CSAM) covers photos, videos, and other content that depicts minors in sexually explicit conduct. More specifically, content involving deception, extortion, the display, incitement, or promotion of the sexualization of minors, and child abduction all falls within the scope of CSAM, which Google expressly prohibits.

Image credit: Google

To prevent its platforms, products, and technologies from being used to spread CSAM, Google has invested heavily in scanning for, blocking, removing, and reporting it. This time, however, what hurt Mark was not Google's scanning technology but a lapse in Google's manual review process.

At major companies, CSAM detection and reporting rely on a two-layer safeguard of algorithmic scanning followed by manual review, and Google is no exception. In Mark's case, however, after the algorithm found the photo, automatically locked his account, and passed the case to manual review, the reviewer apparently did not consider the context and did not realize that the photo had been sent to a medical professional.

Mark appealed immediately. Google, however, refused to reconsider its decision and also refused to let him download and save his own data. Data in a closed account is automatically deleted after two months, so Mark lost a great deal of important information accumulated over the years.

The matter dragged on for a full year, until the police formally opened their investigation at the end of last year.

During that year, Mark suffered something close to "social death": he found it hard to explain fully and honestly to colleagues and friends why his phone number and email address had suddenly vanished.

It was only recently, earlier this year, that the local police completed their investigation and closed the case.

The result was no surprise: Mark was innocent.

Image unrelated to the text. Image source: Direct Media / CC0 license

Google's explanation, which uses the law as a shield after pushing a loyal user into the abyss, is reasonable on its face but weak. A company spokesperson said U.S. child protection laws require companies like Google to report CSAM incidents.

According to Google's Transparency Report, in 2021 alone the company blocked nearly 1.2 million hyperlinks involving CSAM, submitted 870,000 reports covering about 6.7 million pieces of content to the National Center for Missing and Exploited Children (NCMEC), the relevant U.S. watchdog, and closed about 270,000 accounts.

Unfortunately, Mark became one of those 270,000.

To be counted among those 270,000 while innocent, like a prisoner protesting a wrongful conviction, is an ordeal that is hard to imagine.

/ Doing bad things with good intentions /

On its official page describing its efforts to combat CSAM, Google claims that the company has formed and trained specialized teams to use cutting-edge technology to identify CSAM.

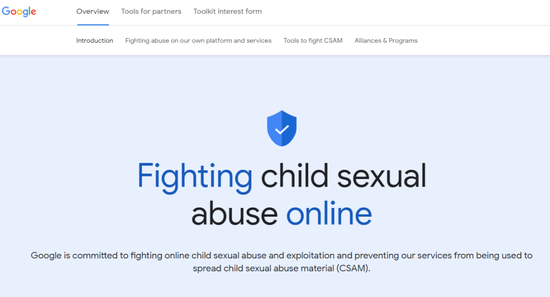

At present, large American companies use two technical approaches to detect CSAM: hash matching and computer vision recognition.

Hash matching is the simpler of the two: a company takes the entries in a database maintained by a third-party organization and compares their hash values against the hashes of images on its own platform, which detects known CSAM. For this, Google used Microsoft's PhotoDNA in its early years. The technology has been around for more than 10 years and is used not only by Google but also by companies such as Meta, Reddit, and Twitter, and by NCMEC, the authoritative public watchdog in the CSAM field.
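The matching step described above can be sketched in a few lines of Python. This is a minimal illustration and not PhotoDNA: it assumes a toy "average hash" over tiny grayscale grids, and the names `average_hash`, `hamming`, `matches_known`, and the `max_distance` threshold are all hypothetical. Production perceptual hashes are far more robust, and the real databases are maintained by third parties.

```python
from typing import Iterable, List

Image = List[List[int]]  # grayscale pixels in the range 0-255


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set if the pixel is above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Image, known_hashes: Iterable[int], max_distance: int = 2) -> bool:
    """True if the image's hash is within max_distance bits of any database entry."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Hypothetical 4x4 "images" standing in for real photos.
known_image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
database = {average_hash(known_image)}  # stands in for a third-party hash list

brightened = [[min(255, p + 20) for p in row] for row in known_image]
unrelated = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]

print(matches_known(brightened, database))  # the edited copy is still matched
print(matches_known(unrelated, database))   # a different pattern is not
```

The point of the sketch is that matching works on hashes, not on the images themselves, and that a perceptual hash is designed to tolerate small edits. That tolerance is also why such a system can only recognize content already in the database.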

Image credit: Microsoft

In addition, Google's YouTube uses its in-house CSAI Match technology to hash-match streaming video.

Of course, new illegal images and videos appear every day, so in addition to hash matching, Google has developed and deployed its own machine learning classifiers based on computer vision to detect previously "unseen" content.

Google has integrated this technology into its Content Safety API, which is also open to third parties. Companies including Meta, Reddit, Adobe, and Yahoo are currently users and partners of Google's in-house CSAM detection technology.

Image credit: Google

In this case, Google appears to have found the content in Mark's Google Photos.

Google Photos is Google's photo backup and cloud album service. It comes pre-installed on Google's own phones and on those of some other mainstream Android manufacturers. Notably, after a user signs in to a Google account in Google Photos, the app prompts the user to turn on automatic backup, and this may be where Mark got caught.

If automatic upload is turned on, Google Photos automatically uploads the camera roll and other photos created on the phone to the cloud, with the exception of photos downloaded in some third-party apps (such as Twitter and Instagram).

According to the official website and a company spokesperson, Google not only explicitly prohibits users from uploading and distributing such content through Google Photos, but its anti-CSAM system also scans and matches photos stored in Google Photos.

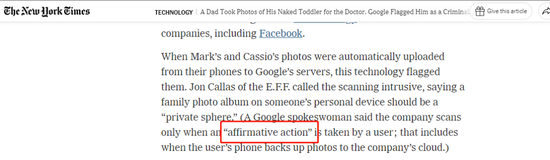

The problem is that, according to a Google spokesperson, the anti-CSAM system targets only images that users upload through "affirmative action."

From a practical point of view, Mark had automatic upload turned on in Google Photos. In his hurry to get his son treated, he took the photo, it was uploaded automatically, he forgot to delete it, and Google later used it against him. Calling that an "affirmative action" is a stretch.

Image source: The New York Times

Scanning for CSAM can protect children and effectively combat pedophilia and other abuse. It sounds like a good thing, right?

In practice, however, as large U.S. internet and technology companies have taken on this work in recent years, problems, failures, and scandals have kept coming. As a result, automated algorithmic detection of CSAM has stirred up major controversy over technology ethics and privacy rights.

If Google's blunder can be called a "man-made disaster", then what happened at Apple around the same time last year can be called a "natural disaster".

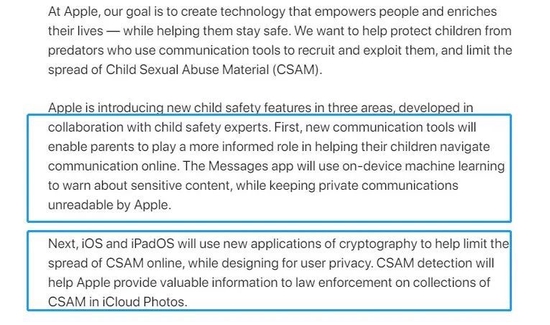

In early August last year, Apple suddenly announced that it would launch a client-side tool for scanning CSAM on the iOS platform.

Image credit: Apple

Apple's headline keyword was "on-device": unlike Google's practice of scanning user content stored on cloud servers, Apple said it would do the work only on the user's device, where the system would download the NCMEC database and perform hash matching entirely locally.

However, Apple's "on-device" and "privacy-focused" claims proved to be surface rhetoric. Some experts found that photos users send to iCloud for storage would also be checked. Other researchers found that Apple's matching algorithm, NeuralHash, has flaws in its design. Nor was the technology something still waiting to be launched: it had been quietly built into public releases of iOS well before, and Apple had deliberately obfuscated the API names to keep it hidden.

As a result, within a month of Apple's announcement, researchers had demonstrated hash collisions and a "preimage attack" on the NeuralHash algorithm.

In simple terms, a hash collision means finding two arbitrary photos that share the same hash value, while a preimage attack is a "deliberately generated collision": given one photo, the attacker generates another photo with different content but the same hash value.
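The difference between the two attack goals can be shown with a small Python sketch. It is only an illustration under loud assumptions: `toy_hash` is a hypothetical 16-bit hash obtained by truncating SHA-256 so that brute-force searches finish quickly, and the inputs are byte strings rather than photos. NeuralHash is a neural-network perceptual hash, and the published attacks on it worked on images, not by brute force. Note also that what the article calls a preimage attack (given one input, produce a different input with the same hash) is usually called a second-preimage attack in cryptography.

```python
import hashlib
from itertools import count
from typing import Tuple


def toy_hash(data: bytes, bits: int = 16) -> int:
    """Hypothetical weak hash: the top `bits` bits of SHA-256 (bits <= 32)."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest[:4], "big") >> (32 - bits)


def find_collision(bits: int = 16) -> Tuple[bytes, bytes]:
    """Collision: ANY two different inputs with the same hash (birthday search)."""
    seen = {}
    for i in count():
        msg = f"photo-{i}".encode()
        h = toy_hash(msg, bits)
        if h in seen:
            return seen[h], msg
        seen[h] = msg


def find_second_preimage(target: bytes, bits: int = 16) -> bytes:
    """Targeted attack: a different input whose hash equals that of a GIVEN input."""
    wanted = toy_hash(target, bits)
    for i in count():
        candidate = f"forged-{i}".encode()
        if candidate != target and toy_hash(candidate, bits) == wanted:
            return candidate


a, b = find_collision()
print(a, b, toy_hash(a) == toy_hash(b))

original = b"original photo"
forged = find_second_preimage(original)
print(forged, toy_hash(forged) == toy_hash(original))
```

With a 16-bit hash, the untargeted collision typically turns up after a few hundred tries, while the targeted search needs on the order of tens of thousands. The targeted attack is the more worrying one for a scanning system, because it lets someone craft a harmless-looking file that matches a specific database entry.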

Some people even found several pairs of natural NeuralHash "twins" (two different original images with the same hash value) directly in the popular ImageNet annotated image database.

The results of these attacks undermined Apple's CSAM detection technology in both principle and logic, rendering it worthless.

Image credit: Cory Cornelius

Early test results suggest that NeuralHash's collision rate is close to the false positive rate Apple claimed, which is within an acceptable range. However, Apple has more than 1.5 billion device users worldwide, an enormous base. Any false positive from NeuralHash, let alone an incident caused by a hash collision, would affect a large number of users.
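The base-rate argument above is simple arithmetic, sketched here in Python. Only the figure of 1.5 billion devices comes from the article. The number of photos per device and the per-image false positive rates are hypothetical values chosen for illustration, and they are not Apple's published figures.

```python
def expected_false_positives(devices: int, photos_per_device: int,
                             false_positive_rate: float) -> float:
    """Expected number of wrongly flagged images across the whole user base."""
    return devices * photos_per_device * false_positive_rate


DEVICES = 1_500_000_000      # "more than 1.5 billion device users" (from the article)
PHOTOS_PER_DEVICE = 1_000    # hypothetical average library size

# Hypothetical per-image false positive rates, for comparison only.
for rate in (1e-6, 1e-9, 1e-12):
    flagged = expected_false_positives(DEVICES, PHOTOS_PER_DEVICE, rate)
    print(f"rate={rate:g}: about {flagged:,.1f} images wrongly flagged")
```

Under these assumptions, even a one-in-a-billion error rate per image still yields on the order of a thousand wrongly flagged images, which is why a rate that looks acceptable in isolation can still matter at this scale.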

Overall, Google and Apple, the two giant mobile platform companies, have worked hard to scan for and crack down on child sexual abuse content, and that effort deserves encouragement.

However, the other side of this story is deeply regrettable:

A single casually taken photo brought work and life to a standstill and nearly ruined them. That is something Mark, and many people with similar experiences, never saw coming.

And that is the awkwardness of the whole affair: when tech platforms overstep and algorithms misfire, good intentions really can lead to bad outcomes.
