Protecting minors online: California at the forefront of America again

The tragedy of minors online

On January 20 last year, Antonella Sicomero, a 10-year-old girl from Sicily, Italy, opened TikTok on her phone and, in her bathroom, tightened a belt around her neck in imitation of the "Blackout Challenge," a choking game popular online. She quickly lost consciousness. By the time her family found her, it was too late.

The shocking incident soon prompted action from Italian and other European regulators. On the third day after the tragedy, Italy's data protection authority (DPA) gave TikTok a three-week ultimatum: if it could not verify the age of its users, it would be barred from operating its service.

Starting in February last year, TikTok checked the ages of its 12 million users in Italy at the time, removed 500,000 accounts that did not meet the age requirement, and identified 140,000 users who had lied about their age. TikTok also promised to introduce more technical means of age verification, and to cancel within 48 hours any account found to have been opened by a child under 13.

However, even apps developed specifically for minors have many loopholes and problems. YouTube Kids is the most successful children's video app in the United States. It is a YouTube platform of curated children's content, including a large number of educational animations, children's songs and learning material, with more than 35 million weekly active users.

Despite YouTube's strict moderation requirements for children's content, a lot of inappropriate content still gets through. YouTube Kids videos that U.S. parents have complained about over the past few years have included cars crashing and burning, characters performing dangerous stunts on the edge of a cliff, spooky graveyard scenes, children playing with razors in the bathroom, and more. Such content may seem unremarkable to adults, but it can leave preschoolers with lasting fears and even lead them to imitate dangerous actions.

Luring children into video addiction is another major reason YouTube Kids has been criticized. The app autoplays videos by default: when the current video finishes, YouTube Kids continues with algorithmically recommended content. Unless parents intervene in real time, children simply keep watching whatever the algorithm recommends. Although the media have reported on the issue since 2017, Google did not change the default setting in the absence of regulatory pressure.

Regulatory legislation passed unanimously

Should social networking sites be held responsible for such tragedies? What technical measures should they take? How can similar tragedies be avoided, and how can minors be kept from inappropriate content on social networks? These are already pressing questions for Internet regulation worldwide.

This time, California is once again at the forefront of the United States. Perhaps California bears a special responsibility for Internet regulation: the world's major social networks and Internet companies are headquartered there, as are YouTube, Facebook, Instagram, Twitter, Snapchat and the US headquarters of TikTok, and California's Internet legislation has always been in the front row in the United States.

This week, the California Senate passed the California Age-Appropriate Design Code Act (AB2273) unanimously with 33 votes. The bill clearly stipulates how social networking sites and Internet platforms should protect minors. The California State Assembly had already approved the bill unanimously with 60 votes. It will now be sent to California Governor Newsom for signature, but it will not take effect until 2024.

It should be emphasized that, despite the Democrats' overwhelming majority in California, unanimous votes are rare. California also has deep-red districts and many conservative Republican members in the legislature, and the Republican leader of the U.S. House of Representatives comes from the agricultural region of central California. Although the two parties clash on many issues, there is a rare consensus on Internet regulation, and both feel the urgency of protecting minors online.

It is worth mentioning that this is also the first law in the United States on how social networking sites should protect the personal information and online activities of minors. At the federal level, a Senate committee advanced two bills in July this year that set clear rules for social networks regarding minors online: prohibiting Internet companies from collecting data on users aged 13-16 unless they obtain those users' consent, and requiring Internet companies to give minor users and their guardians a way to erase their platform activity data.

In addition, the Senate's draft Kids Online Safety Act specifically proposes that social media platforms must give minors a choice, allowing them to opt out of algorithmic recommendations and to block content that is not suitable for minors. However, there is no firm timetable for these bills. California's lead in passing such legislation will also help speed up legislation at the federal level.

Install a digital fence for minors

The California law makes it clear that social networks must put the privacy, safety and well-being of underage users above their own business interests. A social network that violates this rule faces a fine of up to $7,500 per underage user involved.

Specifically, the law requires app and website developers to install "digital fences" for users under the age of 18: they must analyze the harm their services may cause to minors and take proactive measures to protect minors' personal information and web browsing data. The law explicitly mentions the recommendation algorithms and friend-finding features commonly used on social networking sites. These features are intended to increase user stickiness and usage time, but for underage users they carry the risk of harassment and can affect their behavior and their physical and mental health.

The California law has been welcomed by many children's rights groups in the United States. Immediately after the bill passed, Fairplay, an American child safety organization, issued a statement saying, "California's passage of the Age-Appropriate Design Code Act is a huge leap toward building the Internet that children and families deserve."

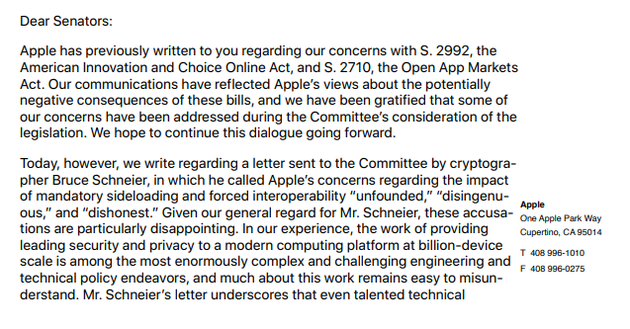

Social media giants such as Facebook and Twitter, and the trade groups representing their interests, had previously lobbied in private to block passage of the bill. They argued that the bill would hinder innovation on the Internet, violate the free-speech protections of the U.S. Constitution, and do nothing to effectively protect families and children.

NetChoice, which represents the interests of Internet platforms, issued a statement firmly opposing these regulatory laws: "These laws will keep Peloton from recommending new exercise content to kids, and Barnes & Noble (a US bookstore chain) from recommending further reading to middle school students. California has always been a leader in technology development, but these laws will only drive innovators to leave California to avoid excessive regulation."

Must be 13 years old to register

As in other countries, underage users in the United States are widespread on social media platforms such as Instagram, TikTok, Facebook, Snapchat and YouTube. In theory, however, not every child can sign up for these services. Most social networking sites in the United States set the minimum registration age at 13.

Take TikTok as an example. Users can sign up with a Facebook, Twitter, Google or Apple account. If they register directly, they must enter their date of birth and a mobile phone number (or email address) and confirm that they are at least 13 years old. Instagram and Facebook likewise ask for a date of birth as the last step of registration. If the user is under 13, the platform refuses the registration outright.

However, although the major social media platforms set clear age requirements and refuse independent registration by children under 13, they only ask users to state their own age, which leaves plenty of loopholes to exploit. Platforms such as Instagram admit that minors can easily register by lying about their age, and that verifying users' actual age is a technical challenge.

The legal basis for age restrictions in the United States is still the Children's Online Privacy Protection Act (COPPA), enacted in 1998. Under this law, Internet companies that provide services to users under 13 face stricter regulatory requirements and legal liability. They must obtain parental consent in advance and publish a "clear and complete" privacy policy to ensure the security and confidentiality of the children's data they collect.

For this reason, most social networking sites in the United States set the minimum registration age at 13. Children under 13 can only watch filtered, age-appropriate content that some platforms provide, in the company of their parents. Teenagers aged 13-16 also face restrictions on social networking sites. On both Instagram and TikTok, accounts in this age group are private by default, meaning only users they approve can see their content and send them private messages.

Some Internet companies also develop separate products for children under 13. YouTube Kids, for example, can only be set up with a parent's help, is meant to be used under parental supervision, and does not allow comments (which means it has no social interaction features). After reaching a settlement with the U.S. Federal Trade Commission in 2019, TikTok launched a section of age-appropriate content for under-13 users in the U.S. market only, in which users cannot search for other videos, comment, or post videos of their own.

Regulatory legislation is seriously lagging behind

But this mechanism has serious loopholes. In 2020, Thorn, a non-profit organization dedicated to protecting children, surveyed 2,002 minors aged 9-17 in the United States about their use of social networking sites; 742 of them were aged 9-12 and 1,260 were aged 13-17.

The survey found that despite the explicit age restrictions imposed by social media companies, the majority of children still use these apps, either under parental supervision or by lying about their age. Among children aged 9-12, the share who use each service daily was: Facebook (45%), Instagram (40%), Snapchat (40%), TikTok (41%), YouTube (78%). What's more, the survey found that 27% of underage users are actually using dating apps, for which the minimum sign-up age is 18.

In addition, Thorn conducted an online harassment survey of 1,000 minors aged 9-17; 391 of them were children aged 9-12 and 609 were teenagers aged 13-17. The results of this survey are even more shocking: 16% of girls aged 9-12 have been harassed online by adults with sexual intent, and 34% of girls aged 13-17 have had similar experiences.

So how do teens deal with this online harassment? 83% said they would block and report the harasser, but only 37% would tell their parents or relatives. Many of the children interviewed said that even after they blocked and reported someone, the same person harassed them again from a different account.

Instagram and Snapchat were the most problematic platforms for this kind of sexually motivated harassment, each accounting for 26% of cases, followed by TikTok and Facebook Messenger (both 18%). Clearly, photo and video social platforms are where teens are most exposed to sexual harassment online.

Regulation or punishment after the fact

Even in the United States, which pays attention to the protection of minors and privacy, the legislative work on the use of social networks by minors is still seriously lagging behind.

Platforms from Facebook to TikTok to YouTube have had numerous violations involving teenage users, for which they have paid hefty fines or settlements. But relative to their revenue, these fines are undoubtedly insignificant. These Internet companies clearly know that there are underage users on their platforms, but they are still collecting the data of these users for targeted advertising and content recommendation.

Other social networking sites have the same problem. In 2019, TikTok reached a settlement with the U.S. Federal Trade Commission, paying a fine of $5.7 million for earlier violations of the privacy of underage users (their avatars and profile information were not set to private by default). In February 2021, TikTok again agreed to pay $92 million to settle a class-action lawsuit over illegally collecting information on minors.

In 2018, 23 U.S. consumer and child protection groups jointly filed a complaint against YouTube with the U.S. Federal Trade Commission. They argued that the site's age restriction measures were inadequate and that it illegally collected child users' usage data, without informing parents or asking for their authorization, in order to deliver YouTube's personalized ads.

Google also stalled on whether to turn off default recommendations and autoplay in the children's app YouTube Kids, because turning them off would directly reduce the time children spend in the app. In early 2021, when Google CEO Pichai appeared at a congressional hearing, Rep. Lori Trahan, a Massachusetts Democrat, questioned him on the matter, because her own kids are addicted to watching YouTube Kids videos.

In addition to the in-person pressure on Pichai, the U.S. House Oversight Committee's subcommittee on economic and consumer policy sent a letter to YouTube CEO Susan Wojcicki, arguing that autoplay could make children addicted to watching videos. A panel from the U.S. antitrust watchdog, the Federal Trade Commission (FTC), also issued a regulatory opinion on YouTube Kids' autoplay feature. Even so, it was not until last August that Google quietly rolled out the ability to turn off autoplay.

Meta has become a public enemy

In this regard, Meta's family of social apps has become a public enemy in the United States. Last year, Frances Haugen, a former product manager in Meta's Civic Integrity department, exposed tens of thousands of pages of internal Meta documents and testified publicly before the US Congress, causing an uproar. Haugen testified that the social networking giant put its own economic interests above the rights of its users. Even though it knew its products might cause harm, it refused to change its recommendation algorithm in pursuit of traffic and user stickiness, and even pushed content to minors that they should not see. According to Haugen's testimony, Meta's interest-based algorithm even pushes images of self-harm to minors and anorexia content to girls with body-image anxiety.

It is precisely because Facebook has such a checkered record that, when it announced plans in May last year to develop a version of Instagram for children under 13, it met unanimous opposition from US regulators and the wider public. The Federal Trade Commission, members of the U.S. Senate and House of Representatives, and various Internet rights groups issued a series of open letters urging Facebook to stop developing a children's version of Instagram immediately. The attorneys general of 44 U.S. states also jointly pressed Zuckerberg to abandon the project. A few months later, last September, Meta had to announce that it was suspending development of a children's version of Instagram.

When it comes to illegally collecting and leaking user data, Facebook is a repeat offender. In 2019, it was fined $5 billion by the US Federal Trade Commission for this, a record for a US regulatory settlement. Last year, Facebook agreed to pay $650 million to settle a class-action lawsuit brought on behalf of 1.6 million users, for scanning users' online photo albums for facial recognition without their authorization.

Even more shocking, from 2016 to 2019 Facebook used a number of beta programs to pay 13-25-year-olds $20 a month to install its data-collecting app, which gave it full access to their mobile phone network usage data. In this way it obtained complete data on a large number of users at a very low price. Facebook knew that minors were involved, but kept collecting the data for three years.

Facebook first collected this user data illegally through a VPN app. After Apple forced the app's removal, Facebook used its enterprise developer certificate to bypass the Apple App Store and kept collecting young people's network data in exchange for payment. The scandal infuriated Apple, which temporarily revoked Facebook's enterprise development rights, and it was also the trigger for the continuing bad relationship between the two companies. Last year, Apple added an option in an iOS update that lets users opt out of data tracking, dealing a significant blow to Meta's social advertising. According to Meta CFO David Wehner, Apple's move could cost Meta up to $10 billion in revenue in 2022.
