
New AI technology non-invasively decodes "brain language" with an accuracy of up to 73%

Artificial intelligence can decode words and sentences from participants' brain activity with surprising accuracy, reaching 73% in one test. This brings AI one step closer to non-invasively decoding language and consciousness from brain activity data.

Sina Technology News, Beijing time, morning of September 14: According to reports, artificial intelligence has taken non-invasive brain decoding a step further. Although the technology cannot yet let people who are unable to communicate verbally converse freely, it does allow scientists to decode speech content from brain activity with notable accuracy.

The AI decodes words and sentences from participants' brain activity with surprising accuracy, though it is still far from 100% accurate. Using only a few seconds of brain activity data, it infers what a person has heard. In a preliminary study, the researchers found that the correct answer appeared among the AI's 10 most likely candidates as often as 73 percent of the time.

The AI's performance has exceeded what many thought was possible, says Giovanni Di Liberto, a computer scientist at Trinity College Dublin in Ireland, who was not involved in the research.

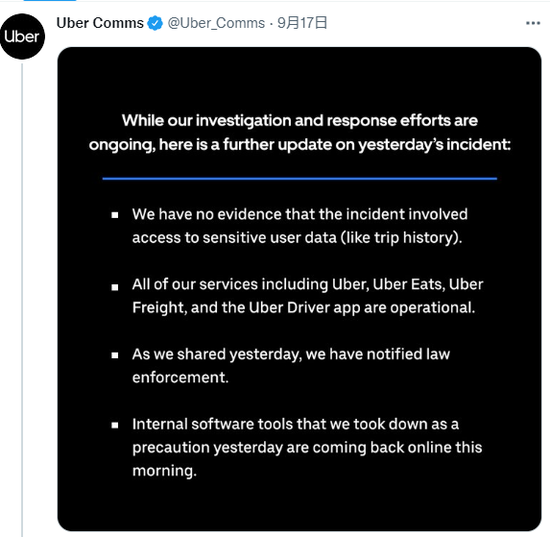

On August 25, media reported that Meta, the parent company of Facebook, had developed a new artificial intelligence technology that could eventually help the tens of thousands of people around the world who cannot communicate through speech, typing or gestures. This includes people in a minimally conscious state, people with locked-in syndrome, and people in a "vegetative state", now commonly referred to as unresponsive wakefulness syndrome.

Most current technologies that help people with speech impairments are physically invasive to some degree and require high-risk brain surgery to implant electrodes, said Jean-Remi King, an AI researcher and neuroscientist at Meta. The newly developed technology is expected to offer a viable way to help patients with communication disorders without resorting to invasive methods, he said.

King and colleagues developed a computational tool that detects words and sentences, training it on 56,000 hours of speech recordings in 53 languages. The tool, also known as a language model, learned to identify specific features of language both at a fine-grained level (e.g., letters or syllables) and at a broader level (e.g., words or sentences).

The research team applied an AI system built on this language model to databases from four institutions containing the brain activity of 169 volunteers. In these datasets, participants listened to various stories and sentences, such as excerpts from Ernest Hemingway's "The Old Man and the Sea" and Lewis Carroll's "Alice in Wonderland," while their brains were scanned with magnetoencephalography (MEG) or electroencephalography (EEG), which measure the magnetic or electrical components of brain signals, respectively.

Then, with the help of a computational method that accounts for physical differences between individual brains, the research team tried to decode what participants had heard using just three seconds of each person's brain activity data. They instructed the AI system to align the speech sounds in the story recordings with the patterns of brain activity that the AI computed as corresponding to what people were hearing. Finally, from more than 1,000 possibilities, the system predicted what the participant was most likely hearing during that short window.
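The matching step described above, choosing among roughly 1,000 candidates and checking whether the right one ranks near the top, can be illustrated with a small sketch. This is not the researchers' code: the cosine-similarity ranking, the synthetic embeddings, the noise level, and the function names `rank_candidates` and `top_k_accuracy` are all assumptions made purely for illustration.

```python
import numpy as np

def rank_candidates(brain_embedding, candidate_embeddings):
    """Return candidate indices sorted from most to least similar (cosine)."""
    b = brain_embedding / np.linalg.norm(brain_embedding)
    c = candidate_embeddings / np.linalg.norm(
        candidate_embeddings, axis=1, keepdims=True
    )
    return np.argsort(-(c @ b))

def top_k_accuracy(brain_embeddings, candidate_embeddings, true_indices, k=10):
    """Fraction of trials whose true candidate is among the k best-ranked."""
    hits = 0
    for emb, true_idx in zip(brain_embeddings, true_indices):
        if true_idx in rank_candidates(emb, candidate_embeddings)[:k]:
            hits += 1
    return hits / len(true_indices)

# Synthetic stand-ins (assumption): 1,000 candidate speech segments and
# 200 noisy "brain" vectors, each derived from one true segment.
rng = np.random.default_rng(0)
n_candidates, dim, n_trials = 1000, 64, 200
candidates = rng.normal(size=(n_candidates, dim))
true_indices = rng.integers(0, n_candidates, size=n_trials)
noisy = candidates[true_indices] + rng.normal(scale=2.0, size=(n_trials, dim))

acc = top_k_accuracy(noisy, candidates, true_indices, k=10)
chance = 10 / n_candidates  # random guessing puts the answer in the top 10 about 1% of the time
print(f"top-10 accuracy: {acc:.2f} (chance level: {chance:.2f})")
```

The chance level is the useful point of comparison: with 1,000 candidates, a random ranking places the true segment in the top 10 only about 1% of the time, so a top-10 score has to be read against that baseline rather than against 100%.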

The researchers found that with magnetoencephalography, the correct answer was among the AI's top 10 candidates as often as 73% of the time. "The performance is very good," Di Liberto said, "but we are not optimistic about the practical application of the system. What can it be used for? Magnetoencephalography requires a bulky and expensive machine, and bringing this technology to the clinic will require technological innovation and improvement that make the device less expensive and easier to use."

Jonathan Brennan, a linguist at the University of Michigan in Ann Arbor, said: "In this latest study, it's important to understand what 'decoding' really means. The term is often used to describe the process of deciphering information directly from a source, here specifically deciphering language from brain activity. The AI can achieve this because the system is given a limited range of possible answers, which greatly improves accuracy. If we want to scale this AI system up to practical applications, that will be difficult to achieve, because the possibilities in language are limitless."

What's more, the AI decoded information from participants who were passively listening to audio, which is not directly relevant to nonverbal patients. For it to become a meaningful communication tool, scientists need to learn how to decipher from brain activity what patients intend to express, such as hunger, discomfort, or a simple "yes" or "no".

In fact, this AI decodes speech perception, not speech production. Although decoding speech production is scientists' ultimate goal, for now there is a pressing need to further improve the related science and technology.
