
Sony AI smashes top real racers, beats humans by 1.5 seconds

"What's the situation?" Emily Jones couldn't believe she was behind.

Emily Jones, a top GT sim racer with multiple wins to her name, slaps her esports steering wheel and stares at the screen in front of her: "I tried my best, but I can't catch it. How did it do that?"

In the game Gran Turismo, Jones was driving her car at 120 miles per hour. To catch up with the fastest "player" in the world, she pushed to 140, even 150 miles per hour several times.

(Source: SONY AI)

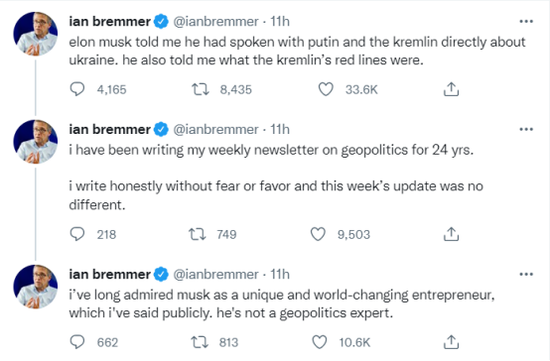

This "player" is actually an artificial intelligence called GT Sophy. It was released in 2020 by Sony's Artificial Intelligence Research Lab and uses artificial intelligence to learn how to control a car in the GT game. In a series of closed-door events in 2021, Sony pitted the AI against top GT drivers.

In July 2021, Jones took part in an event organized by Sony as part of the esports team Trans Tasman Racing, but she didn't know what to expect at the time.

"No one gave me any information. They just told me I didn't need to practice and not to worry about lap times," she recalls. "My attitude was also very simple. If they're keeping it a secret, it's definitely not a bad thing."

In the end, GT Sophy beat Jones's best time by 1.5 seconds. GT records set by human racers are typically separated by milliseconds, so 1.5 seconds is a huge gap.

But Sony quickly learned that speed alone wasn't enough to make GT Sophy a winner. Driving alone on the track, it outpaced human drivers and set records on three different tracks.

Yet when Sony put it in a race against multiple human drivers, it lost. A multiplayer race requires not only speed but also a certain amount of judgment. GT Sophy sometimes incurred penalties for being too aggressive and reckless, and at other times was too timid, giving way when it didn't need to.

Sony retrained the AI and held a second round in October 2021. This time, GT Sophy easily beat the human players. What had changed?

First, Sony built a larger neural network, making the program more capable. But the fundamental difference is that GT Sophy learned "track manners".

Sony AI's U.S. chief, Peter Wurman, said this etiquette is widely observed by human drivers, and its essence is the ability to balance aggression and concession, dynamically choosing the most appropriate behavior in a constantly changing arena.

It is also what sets GT Sophy apart from ordinary racing-game AI. Drivers' interaction and etiquette on the track is one specific case, he said, of the dynamic, situation-aware behavior that a robot should show when interacting with humans.

Recognizing when to take risks and when to act safely can be useful for AI, whether on a manufacturing floor, in home robotics, or in driverless cars.

"I don't think we've learned the general principles of how to deal with the human norms that must be followed. But it's a good start, and hopefully it gives us some insight into the problem," said Wurman.

GT Sophy is just one of many AI systems that have beaten humans, from chess to StarCraft and Dota 2, where AI has beaten the best human players in the world. But the game GT presents Sony with a new challenge.

Unlike other games, especially those that are turn-based, GT requires top players to control the vehicle in real-time while pushing the limits of physics (super speed). In a competition, all other players are doing the same thing.

The virtual race car sprinted past 100 miles per hour, just inches from the edge of the curve. At these speeds, even the slightest error can lead to a collision.

It is reported that GT games are known for capturing and replicating real-world physics in detail, simulating the aerodynamics of racing cars and the friction of tires on the track. The game is sometimes even used to train and recruit real-world racers.

"It does a great job of being realistic," says Davide Scaramuzza, who leads the robotics and perception group at the University of Zurich in Switzerland. He was not involved in the GT Sophy project, but his team used GT games to train AI drivers, which have not yet been tested against humans.

GT Sophy engages with the game differently than a human player. Instead of reading pixels on the screen, it gets data about its own position on the track and the positions of surrounding cars. It also receives information about the virtual physical forces affecting its vehicle.

In response, GT Sophy tells the car to turn or brake. This interaction between GT Sophy and the game takes place 10 times per second, which Wurman and colleagues claim is similar to the reaction time of a human player.
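The loop described above can be pictured with a short sketch. This is only an illustration under stated assumptions, not Sony's implementation: the `Observation` and `Action` fields, the `placeholder_policy` rule, and the distance thresholds are all hypothetical stand-ins. Only the 10-decisions-per-second rate and the general kinds of input come from the article.

```python
from dataclasses import dataclass
from typing import List, Tuple

CONTROL_HZ = 10  # from the article: 10 interactions per second


@dataclass
class Observation:
    # Hypothetical layout: (lateral offset from track centre, distance along track), in metres.
    own_position: Tuple[float, float]
    nearby_cars: List[Tuple[float, float]]
    forces: Tuple[float, float]  # hypothetical (lateral, longitudinal) forces on the car


@dataclass
class Action:
    steering: float  # -1.0 (full left) .. 1.0 (full right)
    throttle: float  # -1.0 (full brake) .. 1.0 (full throttle)


def placeholder_policy(obs: Observation) -> Action:
    """Hand-written stand-in for the trained network (an assumption, not the real policy)."""
    lateral, along = obs.own_position
    # Steer back toward the centre line, clamped to the valid range.
    steering = max(-1.0, min(1.0, -lateral))
    # Brake if another car is close ahead in roughly the same lane.
    too_close = any(
        abs(x - lateral) < 2.0 and 0.0 < y - along < 10.0
        for x, y in obs.nearby_cars
    )
    return Action(steering=steering, throttle=-0.5 if too_close else 1.0)


def steps_in(seconds: float) -> int:
    """Number of decisions the agent makes in a given stretch of driving."""
    return round(seconds * CONTROL_HZ)
```

At this rate, a 90-second lap gives the agent 900 chances to adjust its steering and pedals, which is why a trained policy rather than a fixed script is needed.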

Sony used reinforcement learning to train GT Sophy from scratch through trial and error. At first, the AI struggled just to keep the car on the road.

But after training on 10 PS4s (each running 20 instances of the program), GT Sophy reached the level of GT's built-in AI, equivalent to an amateur player, in about 8 hours. Within 24 hours, it was near the top of a leaderboard of the best scores of 17,700 human players.

Over nine days of training, GT Sophy kept shaving time off its laps. In the end, it was faster than any human player.

Sony's artificial intelligence has, in effect, learned to drive right at the limits the game allows, pulling off moves beyond the reach of human players. What impresses Jones most is the way GT Sophy corners, braking early so that it can take a tighter line and accelerate out of the corner.

"GT Sophy treats the line in a weird way and does things I never even thought of," she says. For example, GT Sophy often puts a tire onto the grass at the edge of the track and slides into a corner. "Most people don't do that because it's too easy to make a mistake. It's like a controlled crash. Give me a hundred chances and I might only succeed once."

GT Sophy quickly mastered the game's physics, though the bigger issue was the referee. In professional competition, GT races are overseen by human judges, who have the right to deduct points for dangerous driving.

Cumulative penalties were a key reason why the GT Sophy lost its first round in July 2021, even though it was faster than any human driver. In the second round a few months later, it learned how to avoid penalties and the results were very different.

Wurman has put several years of effort into GT Sophy. On the wall behind his desk hangs a painting of two cars fighting for position. "It's GT Sophy overtaking Yamanaka," he said.

He was referring to top Japanese GT driver Tomoaki Yamanaka, one of four Japanese professional sim racers who raced GT Sophy in 2021.

He couldn't remember which race the painting was from. If it was the October 2021 event, Yamanaka was probably enjoying himself, facing a strong but fair opponent. If it was the July 2021 event, he was probably cursing the computer for its baffling behavior.

Yamanaka's teammate Takuma Miyazono briefly described the July 2021 event to us through translation software. He said: "We were knocked off the track a couple of times by GT Sophy because it cornered too aggressively. It annoyed us, because human players slow down in corners to avoid going off the track."

Warman

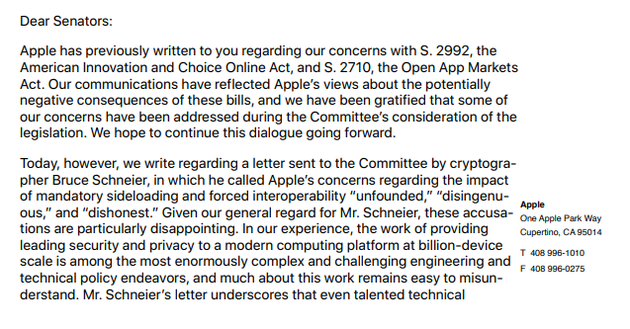

That said, training an AI to play fair without losing a competitive advantage is extremely difficult. Human judges make subjective decisions that depend on the environment, making it difficult for humans to translate them into things that AI can learn, such as which actions can and cannot be done.

The Sony researchers tried giving the AI many different signals and tweaking them, hoping to find a combination that worked. If it veered off the track, hit a fence, collided with another car, or did something likely to be penalized by a referee, it was penalized.

They experimented with the strength of each penalty and observed how GT Sophy's driving style changed in response.
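To see why the strength of each penalty matters, consider a minimal sketch of a shaped reward. The article does not say how Sony actually computed its reward, so everything here is an assumption for illustration: the `PenaltyWeights` fields, the `step_reward` function, the use of metres of progress as the positive term, and the example numbers.

```python
from dataclasses import dataclass


@dataclass
class PenaltyWeights:
    # Hypothetical tunable strengths, one per kind of infraction named in the text.
    off_track: float
    wall_contact: float
    car_collision: float


def step_reward(progress_m: float, off_track: int, wall_contact: int,
                car_collision: int, w: PenaltyWeights) -> float:
    """Progress made this step minus weighted penalties (illustrative formula only)."""
    penalty = (w.off_track * off_track
               + w.wall_contact * wall_contact
               + w.car_collision * car_collision)
    return progress_m - penalty


lenient = PenaltyWeights(off_track=1.0, wall_contact=1.0, car_collision=1.0)
strict = PenaltyWeights(off_track=1.0, wall_contact=1.0, car_collision=10.0)

# A shove that gains 5 m but causes a collision, versus a clean move that gains 3 m.
shove_lenient = step_reward(5.0, 0, 0, 1, lenient)
shove_strict = step_reward(5.0, 0, 0, 1, strict)
clean = step_reward(3.0, 0, 0, 0, lenient)
```

With the lenient weights the shove scores higher than the clean move, so an agent trained on that reward would tend to learn to barge past. With the strict collision weight the ordering flips. Set the weight too high, though, and the agent may prefer never to attempt a pass at all, which is the timid behavior described earlier.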

Sony also stepped up the competition GT Sophy faced in training. Before that, it had mainly been trained against older versions of itself.

Before the rematch in October 2021, Sony invited top GT drivers to help test the AI every week or two, and kept adjusting it based on the results.

"This gave us the feedback we needed to find the right balance between aggression and concession," Wurman said.

This worked. Three months later, when Miyazono raced the GT Sophy, the latter's aggressive performance was gone - but it wasn't simply backing down. "When two cars enter a corner side by side, the GT Sophy leaves enough room for a human driver to pass," he says. "It makes you feel like you're racing against another real person."

He added: "The driver gets a different kind of passion and fun in the face of that reaction. It really impressed me."

Scaramuzza is very impressed with Sony's work. "We use human abilities to measure advances in robotics," he says. But, his colleague Elia Kaufmann points out, it is still human researchers who largely dictate the behavior GT Sophy learns.

"Good track etiquette is taught to artificial intelligence by humans," he said. "It would be really interesting if this could be automated." Not only would such a machine have good track manners; more importantly, it would be able to understand what the playing field looks like and change its behavior to suit a new setting.

Scaramuzza's team is now applying its GT racing research to real-world drone racing, using raw video input rather than simulated data to train an AI to fly. In June 2022, they invited two world champion drone pilots to compete against the computer.

"After seeing our AI race, their expressions said it all. They were blown away," he said.

He believes that real advances in robotics must extend to the real world. "There's always going to be a mismatch between the simulation and the real world," he said, "and that gets forgotten when people talk about the incredible advances in AI. On the strategy side, yes. But in terms of deployment to the real world, we're nowhere close."

For now, Sony is still insisting that the technology be used only in games. It plans to use GT Sophy in future versions of the GT game. "We want this to be part of the product," said Peter Stone, executive director of Sony AI America. "Sony is an entertainment company, and we hope this makes gaming more fun."

Jones believes that once people get the chance to watch the GT Sophy drive, the entire sim racing community can learn a lot from it. "On many tracks we'll find a lot of the driving skills that we've had over the years are flawed and there are actually faster ways."

Miyazono is already trying to replicate the way AI navigates corners because it has shown it can be done. "If the baseline changes, everyone's skills will improve," Jones said.
